
Comparing Ranking Methods and Likert-type scales

Likert-type scales are a popular tool in questionnaires and evaluations for gathering reviews and opinions from participants. The need for alternatives to Likert-type scales has arisen from scientifically ambiguous guidelines for constructing such scales on the one hand, and from repeated criticism of incorrect statistical analysis of Likert-type data on the other. Within an evaluation, several objects of investigation can be ranked and thus rated by their assigned rank. Do ranking methods then yield the same results and winners as Likert-type scales? A preliminary study (18 German and Colombian participants) and a main study (24 participants) were conducted to investigate this question. The results of the exploratory pre-study led us to the design of the ranking method for the main study. Our findings from the main study show that multiple ranking scales (evaluated with the Schulze method) achieve the same results as Likert-type scales when determining a ranking winner. For this reason, it can be assumed that multiple ranking scales are an alternative to the commonly used Likert-type scales. Due to a mistake in our study design, we cannot prove that participants are faster with multiple ranking scales than with Likert-type scales; further studies have to answer this question.
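The Schulze method mentioned above can be illustrated with a minimal sketch. It assumes that every ballot is a complete, strict ranking of all candidates; the function name `schulze_ranking` and the example ballots are hypothetical and are not data from our studies.

```python
from itertools import permutations


def schulze_ranking(candidates, ballots):
    """Rank candidates with the Schulze method.

    ballots: list of rankings, each a list of all candidates ordered
    from most preferred to least preferred (assumption: no ties, no gaps).
    """
    # d[a][b] = number of voters who rank a above b
    d = {a: {b: 0 for b in candidates} for a in candidates}
    for ballot in ballots:
        position = {c: i for i, c in enumerate(ballot)}
        for a, b in permutations(candidates, 2):
            if position[a] < position[b]:
                d[a][b] += 1

    # p[a][b] = strength of the strongest path from a to b,
    # initialised with the direct pairwise victories
    p = {
        a: {b: (d[a][b] if d[a][b] > d[b][a] else 0) for b in candidates}
        for a in candidates
    }
    # Floyd-Warshall style widest-path computation
    for k in candidates:
        for i in candidates:
            if i == k:
                continue
            for j in candidates:
                if j == i or j == k:
                    continue
                p[i][j] = max(p[i][j], min(p[i][k], p[k][j]))

    # a candidate beats another if its strongest path to it is stronger
    wins = {
        a: sum(p[a][b] > p[b][a] for b in candidates if b != a)
        for a in candidates
    }
    return sorted(candidates, key=lambda c: -wins[c])


# Hypothetical ballots containing a preference cycle (A > B > C > A)
example_ballots = (
    [["A", "B", "C"]] * 3 + [["B", "C", "A"]] * 2 + [["C", "A", "B"]] * 2
)
print(schulze_ranking(["A", "B", "C"], example_ballots))
```

The example deliberately contains a cycle, which is the case where a plain Condorcet check finds no winner and the strongest-path comparison is needed to resolve the order.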

Members: Julia Sageder, Ariane Demleitner, Oliver Irlbacher

Keywords: ranking, Condorcet, Schulze method, Likert, evaluation, Computational Social Choice, COMSOC, comparison, scales, voting, multiple ranking, versus ranking, pairwise ranking

Original Description (outdated):

Multiple ranking methods (e.g. Condorcet, Likert, Point) are compared and evaluated in this project to determine the best set or combination of ranking methods. As a result, a “best suited” ranking questionnaire will be generated, which is structured and steadily improved through one or more pre-studies and a final main study.

Background (outdated)

When ranking a set of candidates, many ranking methods are available to determine which candidate wins the vote and therefore takes the highest rank. For example, when comparing websites (A, B, C, D, E), we want to select the “best” website. What “best” means can be specified by multiple criteria that must be assessed. This can be done by evaluating and comparing presentational forms of the questionnaire (e.g. the design or the complexity of the websites) or by evaluating and comparing calculation methods for computing the ranking outcome. Each vote for the “best” subject, e.g. a website, is a subjective decision. By choosing suitable types, sequences or wordings of questions or options, the ranking is intended to get closer to objectivity. To identify salient patterns and to gradually approach research questions, a custom questionnaire structure has to be generated and verified by conducting one or more pre-studies and a main study. As a result, the “best” ranking questionnaire structure is intended to be the one that most closely matches a set of expected values.

Goals (outdated)

- Literature research for different questionnaire designs and ranking methods in existing studies (especially for HCI)

- Collection of diverse questionnaire forms (types, choices, sequences, scales, single vs. multiple options, etc.)

- Collection of most common ranking methods (Likert scale, point voting, percentage voting, Condorcet voting, e.g. Schulze method)

- Definition of ranking subject (e.g. websites, interfaces, psychological or another context)

- Definition of “objectivity” (e.g. expert assessment or heuristics for website or interface complexity, estimation of time or length relevant context)

- Design, implementation and execution of pre-study/pre-studies and main-study to exclude unsuitable forms/methods, to discover research questions and to verify a custom questionnaire structure step by step (in consideration of expected values)
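Two of the methods listed above, point voting and Likert-type rating, can be contrasted with a small sketch. It assumes a Borda-style point scheme for point voting and the median as the aggregate for Likert-type ratings; both choices, the function names, and the example data are illustrative assumptions rather than the design used in our studies.

```python
from statistics import median


def point_voting_winner(candidates, ballots):
    """Borda-style point voting: with n candidates, rank 1 earns n-1
    points, rank 2 earns n-2 points, and so on down to 0 points."""
    n = len(candidates)
    scores = {c: 0 for c in candidates}
    for ballot in ballots:
        for position, candidate in enumerate(ballot):
            scores[candidate] += n - 1 - position
    # ties are broken by the order of `candidates` (an arbitrary assumption)
    return max(candidates, key=lambda c: scores[c]), scores


def likert_winner(ratings):
    """Pick the candidate with the highest median Likert-type rating.

    ratings: dict mapping each candidate to a list of ratings (e.g. 1-5).
    """
    medians = {c: median(values) for c, values in ratings.items()}
    # ties are broken by dictionary insertion order (an arbitrary assumption)
    return max(medians, key=medians.get), medians


# Hypothetical data for three websites rated and ranked by five participants
ballots = [["A", "B", "C"]] * 3 + [["B", "A", "C"]] * 2
ratings = {
    "A": [5, 5, 4, 3, 4],
    "B": [3, 3, 3, 5, 4],
    "C": [1, 2, 2, 1, 2],
}

print(point_voting_winner(["A", "B", "C"], ballots))
print(likert_winner(ratings))
```

The median is used here instead of the mean because Likert-type responses are ordinal; a different aggregate could produce a different winner.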

Updates

Writing of the Rebuttal (2019-03-08)

To help improve our paper, seven people took the time to write a review. (more...)

Writing of the paper (2019-02-19)

After conducting the user tests (pre-study, main-study), central thoughts and findings were discussed and the writing of the paper was initiated. (more...)

Results of the main study (2019-02-18)

Evaluation of the main study and analysis of the data to see whether ranking is an alternative to Likert scales. (more...)

Completion of the main study concept (2019-01-26)

After analyzing the results from the preliminary study, we decided to conduct the main study to determine whether ranking is an alternative to Likert scales, using appropriate data as ground truth. (more...)

Finishing the pre-study (2019-01-08)

The pre-study is nearly complete; only a few participants remain until tomorrow. (more...)

Completion of the pre-study concept (2018-12-28)

After the expert interview and a subsequent discussion within the group, we arrived at a first concept for the pre-study. (more...)

Discussion of research subject (expert interview) (2018-12-14)

After reviewing a range of literature on ranking studies and possible research questions and approaches, we discussed our previous considerations within the group and in an expert interview. (more...)

Initial concept of the project (2018-11-21)

After initially familiarizing ourselves with the topic of the project by researching literature on ranking and ranking methods, we collected and discussed information (e.g. methods, outcome, background, goals). (more...)

Further Resources and References

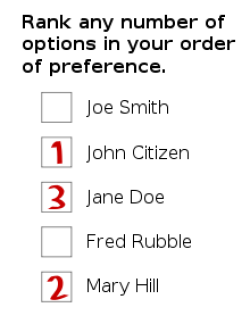

Image source: https://upload.wikimedia.org/wikipedia/commons/thumb/1/18/Preferential_ballot.svg/220px-Preferential_ballot.svg.png

- Felix Brandt, Vincent Conitzer, Ulle Endriss, and Jerome Lang (Eds.). 2016. Handbook of computational social choice. Cambridge University Press, New York.

- Yann Chevaleyre, Ulle Endriss, Jerome Lang, and Nicolas Maudet. 2007. A Short Introduction to Computational Social Choice. SOFSEM 2007: Theory and Practice of Computer Science. Lecture Notes in Computer Science 4362 (2007).

- Paul M. Fitts. 1954. The information capacity of the human motor system in controlling the amplitude of movement. Journal of Experimental Psychology 47, 6 (1954), 381–391.

- Fajwel Fogel, Alexandre d’Aspremont, and Milan Vojnovic. 2016. Spectral Ranking using Seriation. Journal of Machine Learning Research 17 (2016), 1–45.

- Maurits Kaptein, Clifford Nass, and Panos Markopoulos. 2010. Powerful and Consistent Analysis of Likert-Type Rating Scales. CHI 2010 (2010), 2391–2394.

- Rensis Likert. 1932. A Technique for the Measurement of Attitudes. Archives of Psychology 22 (June 1932), 5–55.

- N. Menold and K. Bogner. 2016. Design of Rating Scales in Questionnaires. GESIS Survey Guidelines. GESIS - Leibniz Institute for the Social Sciences, Mannheim. https://doi.org/10.15465/gesis-sg_en_015

- Judy Robertson. 2012. Likert-type Scales, Statistical Methods, and Effect Size. Judy Robertson writes about researchers’ use of the wrong statistical techniques to analyze attitude questionnaires. Commun. ACM 55, 5 (May 2012), 1–45. https://doi.org/10.1145/2160718.2160721

- Joerg Rothe, Dorothea Baumeister, Claudia Lindner, and Irene Rothe. 2012. Einfuehrung in Computational Social Choice. Individuelle Strategien und kollektive Entscheidungen beim Spielen, Waehlen und Teilen. Spektrum Akademischer Verlag Heidelberg.

- Markus Schulze. 2003. A new monotonic and clone-independent single-winner election method. Voting Matters 17 (2003), 9–19.

- Markus Schulze. 2018. The Schulze Method of Voting. CoRR abs/1804.02973 (2018). http://arxiv.org/abs/1804.02973

- Kerstin Voelkl and Christoph Korb. 2017. Deskriptive Statistik - Eine Einführung für Politikwissenschaftlerinnen und Politikwissenschaftler (1st ed.). Springer-Verlag, Berlin Heidelberg New York.