
A research of researches (2019-01-31)

Tagged as: blog, AR, publications, classification, technology, evaluation

Group: G

In this blog article we present the process and progress of our analysis of state-of-the-art AR papers.

Hello everyone!

In this blog article we give an update on the progress of our state-of-the-art AR paper analysis. Over the last weeks we were busy creating a plan for selecting, retrieving, sorting and analyzing state-of-the-art papers in the area of AR. Our methodology follows proposals from the literature, which we have slightly adapted to our requirements. The goal of the paper analysis is to record which AR technologies were used in state-of-the-art AR papers and, especially, how these applications were evaluated, so that we can report how frequently each method was used, identify gaps and trends, and compare them with results from previous work.

We already defined our selection criteria in the blog article “Selecting state-of-the-art papers (2018-12-12)”. As described there, we analyze publications from ISMAR and CHI, two major conferences in the field of Augmented Reality. According to Duenser, Grasset, & Billinghurst (2008), the ACM Digital Library and IEEE Xplore are two main publication sources in the field of AR, which is why they used these as sources for their paper analysis.

Regarding the time interval for our publication analysis, we finally decided on the years 2015 to 2017, following on from the work of Dey, Billinghurst, Lindeman, & Swan (2016), who analyzed AR publications from the years 2005 to 2014. Contrary to our initial idea, we decided to drop papers from 2018: the papers from ISMAR 2018 are not yet in the IEEE database (as of January 2019), so they cannot be found with our keyword search and therefore could not be analyzed.

Paper acquisition

After defining our paper selection criteria, we continued with the acquisition of the publications and the creation of a corpus. We decided to collect papers using a set of keywords entered in the search engines of the publishers’ websites. To be able to compare our methodology with approaches from the literature, we adopted the keywords from Duenser, Grasset, & Billinghurst (2008) directly, which were also used by other surveys in the field of AR (Dey et al., 2016). They are listed below:

- "augmented reality" AND "user evaluation"

- "augmented reality" AND "user evaluations"

- "augmented reality" AND "user study"

- "augmented reality" AND "user studies"

- "augmented reality" AND "feedback"

- "augmented reality" AND "experiments"

- "augmented reality" AND "experiment"

- "augmented reality" AND "pilot study"

- "augmented reality" AND "participant" AND "study"

- "augmented reality" AND "participant" AND "experiment"

- "augmented reality" AND "subject" AND "study"

- "augmented reality" AND "subject" AND "experiment"

As the focus of Duenser, Grasset, & Billinghurst (2008) lay primarily on user evaluations, we extended the set of keywords with the ones listed below, because our survey also analyzes the AR technologies used.

- "augmented reality" AND "application"

- "augmented reality" AND "applications"

- "augmented reality" AND "prototype"

- "augmented reality" AND "prototypes"

- "augmented reality" AND "tracking"

- "augmented reality" AND "display"

- "augmented reality" AND "displays"

- "augmented reality" AND "user interface"

- "augmented reality" AND "user interfaces"

- "augmented reality" AND "input"

To retrieve the publications we used the search engines on the websites of IEEE Xplore and the ACM Digital Library, i.e. the publishers of the proceedings of ISMAR and CHI, respectively. There we entered each of the keyword combinations listed above, one after another. We filtered the results on the IEEE website by year and chose “ISMAR” and “ISMAR-Adjunct” (for 2016 and 2017) and “ISMAR” and “ISMAR - Media, Art, Social Science, Humanities and Design” (for 2015) as publication titles. The results from the ACM Digital Library were filtered by year, with “CHI” selected as proceeding series and “CHI’<yy>” as event. We imported all resulting publications into Zotero and excluded all posters, workshop summaries, demos etc., so that only papers were taken into account for our analysis.
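The keyword combinations above can also be generated rather than typed by hand, which makes it easier to check that none was skipped. The following is only a minimal sketch: the names `build_query`, `EVALUATION_TERMS`, `TECHNOLOGY_TERMS` and `SEARCH_PLAN` are made up for this illustration, and pairing every query with every year is an assumption about how the searches could be organized. The venue filters are still set by hand on the websites, and the exact query syntax each search engine accepts is not covered here.

```python
from itertools import product

BASE = "augmented reality"

# Additional terms taken from the two keyword lists in this post.
EVALUATION_TERMS = [
    ("user evaluation",), ("user evaluations",),
    ("user study",), ("user studies",),
    ("feedback",), ("experiments",), ("experiment",), ("pilot study",),
    ("participant", "study"), ("participant", "experiment"),
    ("subject", "study"), ("subject", "experiment"),
]
TECHNOLOGY_TERMS = [
    ("application",), ("applications",),
    ("prototype",), ("prototypes",),
    ("tracking",), ("display",), ("displays",),
    ("user interface",), ("user interfaces",), ("input",),
]


def build_query(terms):
    """Join the base phrase and the extra terms into one quoted AND query."""
    return " AND ".join(f'"{term}"' for term in (BASE, *terms))


QUERIES = [build_query(terms) for terms in EVALUATION_TERMS + TECHNOLOGY_TERMS]

# Assumption: one search per (query, year) pair for the analyzed years.
YEARS = [2015, 2016, 2017]
SEARCH_PLAN = list(product(QUERIES, YEARS))

if __name__ == "__main__":
    for query in QUERIES:
        print(query)
```

Printing the list gives one line per keyword combination, which can be ticked off while searching.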

Based on this approach we found approximately 360 papers related to AR. Approximately 170 of them could be excluded, as they did not match our definition of a paper or were not accessible to us, leaving approximately 170 papers for our analysis. During the analysis some papers will also turn out to be false positives, i.e. they are not related to the topic of AR although they met the keyword criteria.

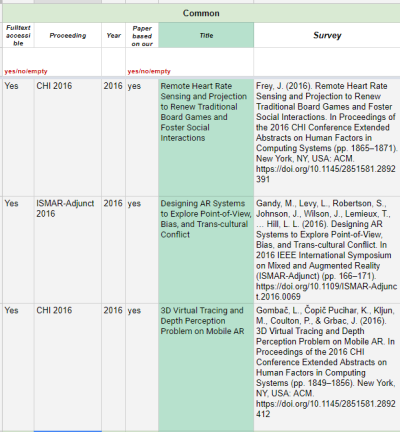

Paper classification table

Following the literature review process of vom Brocke et al. (2009) (cf. blog xy), we are currently in Phase IV, as we have begun to analyze the papers found during our search. For this phase we created a paper classification table, using the so-called concept matrix from Webster and Watson (2002) as a reference and adapting it slightly. In this table we divided the analyzed topics into multiple subtopics (cf. list below). Each paper was added with its metadata, e.g. title or bibliographic reference, and complemented with the analysis results afterwards. The papers in the table are sorted by year, within a year by conference, and within a conference alphabetically.
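The sort order of the table (year, then conference, then alphabetical) corresponds to a sort with a compound key. Here is a minimal sketch, assuming that "alphabetical" refers to the paper title and that case is ignored. The class `PaperRow`, the function `sort_rows` and the example titles are placeholders for this illustration, not entries from our table.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PaperRow:
    year: int
    proceeding: str
    title: str


def sort_rows(rows):
    """Sort by year, then by conference, then by title (case-insensitive)."""
    return sorted(
        rows,
        key=lambda row: (row.year, row.proceeding.casefold(), row.title.casefold()),
    )


# Placeholder rows, not real papers.
rows = [
    PaperRow(2016, "ISMAR", "Zeta"),
    PaperRow(2015, "ISMAR", "Beta"),
    PaperRow(2015, "CHI", "alpha"),
    PaperRow(2015, "CHI", "Gamma"),
]
sorted_titles = [row.title for row in sort_rows(rows)]
```

Because Python compares tuples element by element, the year decides first, and the conference and title are only used to break ties.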

A paper is represented by one row, containing the following information as columns, if available:

- Common (metadata of a paper)

  - Full text accessible: whether the paper is available in full text and can therefore be included in our analysis

  - Proceeding: name of the conference

  - Year: year of publication

  - Paper based on our definition?: whether the publication is a paper rather than a poster, workshop summary etc.

  - Title: title of the publication

  - Survey: bibliography

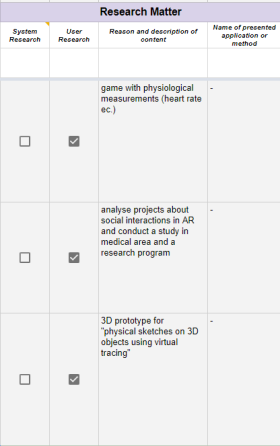

- Research Matter (general overview of the content of the publication)

  - System research/user research: the research subject of the publication, i.e. whether a system or the user evaluation is the focus

  - Reason and description of content: justification of the decision in the previous column and a short summary of the publication’s content

  - Name of presented application or method

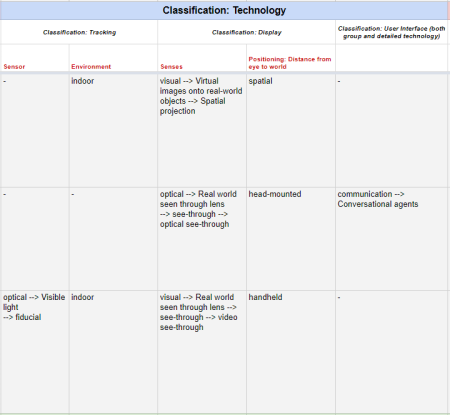

- Classification: Technology (information about the AR technologies used)

  - Classification: Tracking: both sensor and environment

  - Classification: Display: both senses and positioning, i.e. distance from eye to world

  - Classification: User Interface: both group and detailed technology

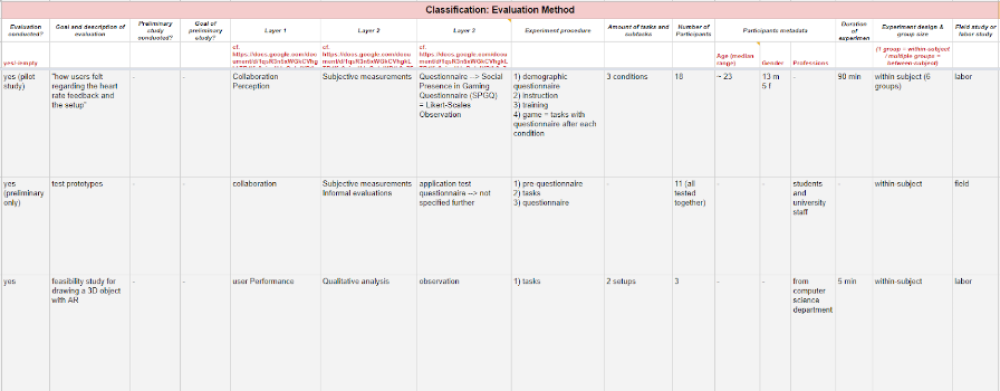

- Classification: Evaluation Method (information about the conducted evaluation)

  - Evaluation conducted?: whether an evaluation was conducted in the publication

  - Goal and description of evaluation

  - Preliminary study conducted?: whether a pilot or preliminary study was conducted in the publication

  - Goal of preliminary study?

  - Layer 1: based on Duenser, Grasset and Billinghurst (2008) (cf. blog article “A new year and new results (2019-01-04)”)

  - Layer 2: based on Duenser, Grasset and Billinghurst (2008) (cf. blog article “A new year and new results (2019-01-04)”)

  - Layer 3: based on Duenser, Grasset and Billinghurst (2008) (cf. blog article “A new year and new results (2019-01-04)”)

  - Experiment procedure: structure of the evaluation, e.g. training session, tasks, post-questionnaire

  - Number of tasks and subtasks

  - Number of participants

  - Participants’ metadata: age (median), gender, profession

  - Duration of experiment

  - Experiment design & group size: within-subject or between-subject design

  - Field study or lab study: whether the study took place in the lab or outside of it

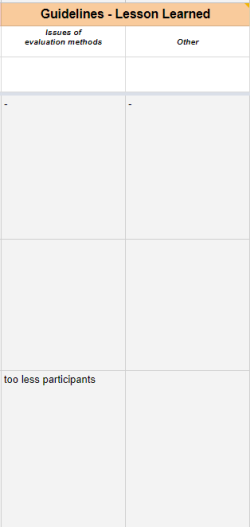

- Guidelines: Lessons Learned

  - Issues of evaluation methods: observed problems, e.g. classification categories that are missing (e.g. for input devices like game controllers) or a vague description of participants

  - Other
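Once the table is filled in, the frequency of the methods used can be read off by counting the values in a single column. The sketch below shows one way to do this with `collections.Counter`. The column names `tracking` and `evaluation_conducted`, the category labels and the rows are invented for illustration and are not results of our analysis; empty cells are skipped because the columns are only filled "if available".

```python
from collections import Counter


def category_frequency(rows, column):
    """Count how often each value occurs in one column, skipping empty cells."""
    values = (row.get(column) for row in rows)
    return Counter(value for value in values if value is not None and value != "")


# Invented example rows; labels are placeholders, not findings.
rows = [
    {"title": "Paper A", "tracking": "optical", "evaluation_conducted": True},
    {"title": "Paper B", "tracking": "optical", "evaluation_conducted": False},
    {"title": "Paper C", "tracking": "inertial", "evaluation_conducted": True},
    {"title": "Paper D", "tracking": None, "evaluation_conducted": True},
]

tracking_counts = category_frequency(rows, "tracking")
evaluation_counts = category_frequency(rows, "evaluation_conducted")
```

Calling `tracking_counts.most_common()` then lists the categories from most to least frequent, which is the form needed for comparing with earlier surveys.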

We divided the papers among the three of us, resulting in approximately 56 papers for each of us to analyze. Our deadline for this analysis is February 7th, and the results are to be finalized by February 15th. The best approach for evaluating the results will emerge during the paper analysis.
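As a small worked example of this split: dealing out papers one at a time keeps the three shares within one paper of each other. The sketch assumes exactly 170 papers (our count is only approximate), and the reviewer labels and the function `split_round_robin` are hypothetical.

```python
def split_round_robin(papers, reviewers):
    """Deal papers out one by one so the shares differ by at most one."""
    shares = {name: [] for name in reviewers}
    for index, paper in enumerate(papers):
        shares[reviewers[index % len(reviewers)]].append(paper)
    return shares


# Assumption: exactly 170 papers, identified by placeholder names.
papers = [f"paper-{number:03d}" for number in range(170)]
shares = split_round_robin(papers, ["reviewer_1", "reviewer_2", "reviewer_3"])
sizes = [len(share) for share in shares.values()]
```

With 170 papers this yields shares of 57, 57 and 56 papers, in line with the roughly 56 papers per person mentioned above.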

That was all for today. See you next time!

References

vom Brocke, J., Simons, A., Niehaves, B., Riemer, K., Plattfaut, R., & Cleven, A. (2009). Reconstructing the Giant: On the Importance of Rigour in Documenting the Literature Search Process. ECIS 2009 Proceedings, Paper 161. http://aisel.aisnet.org/ecis2009/161

Dey, A., Billinghurst, M., Lindeman, R. W., & Swan II, J. E. (2016). A Systematic Review of Usability Studies in Augmented Reality between 2005 and 2014. In 2016 IEEE International Symposium on Mixed and Augmented Reality (ISMAR-Adjunct) (pp. 49–50). https://doi.org/10.1109/ISMAR-Adjunct.2016.0036

Duenser, A., Grasset, R., & Billinghurst, M. (2008). A Survey of Evaluation Techniques Used in Augmented Reality Studies. ACM SIGGRAPH ASIA 2008 …. https://doi.org/10.1145/1508044.1508049

Webster, J., & Watson, R. T. (2002). Guest Editorial: Analyzing the Past to Prepare for the Future: Writing a literature Review, 11.