MR&VR Surveys and evaluation methods (2019-01-18)

Tagged as: blog, mr, vr, survey, evaluation methods

Group: G

An overview of evaluation methods and of surveys in related fields (MR and VR)

In today’s blog article we will show you guys what we found out about surveys in related fields such as Mixed Reality (MR) and Virtual Reality (VR). After that, we will give you an overview of existing evaluation methods.

MR&VR Surveys

Firstly, we wanted to know whether there are survey papers, i.e. papers that present the state of the art in a systematic way (Åström & Eykhoff, 1971), in related fields such as mixed reality (MR) and virtual reality (VR). This was important for us because we wanted to learn how the paper collection was done in fields other than AR: in the field of AR itself, there is very little information on how papers were selected and collected. The most important information we needed was how other authors selected the relevant papers for their research, i.e. which venues and which criteria they used. Unfortunately, none of the MR and VR surveys we found described how they collected or extracted their papers or which criteria they applied, so we did not find the information we were looking for. That is why we will orient ourselves on the survey by Dünser, Grasset & Billinghurst (2008) and use it as the basis for our research.

In the next blog article we will show you a detailed scheme, e.g. which keywords and which venues will be used for the search, so that we know which papers we have to include or exclude. You can have a look here.

Evaluation Methods

Our second main line of research concerned evaluation methods. Here we wanted to find the evaluation methods that are used in human-computer interaction (HCI). The main reason is that, after our final search for AR papers (using the selection criteria mentioned briefly above and detailed in a separate blog article), we want to categorize the evaluation methods used in these papers and find out which evaluation methods were not used. In addition, we want to determine how often each evaluation method was used. That is why it is important for us to get an overview of the existing evaluation methods. For this we prepared some diagrams.
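The tally described above (how often each evaluation method was used, and which methods were not used at all) could look like the following minimal Python sketch. The master list, the paper IDs, and their codings are hypothetical placeholders for illustration, not our actual data.

```python
from collections import Counter
from typing import Dict, List

# Hypothetical master list: in practice this would be the full set of
# evaluation methods from our overview (only a few are shown here).
ALL_METHODS = [
    "questionnaire",
    "thinking aloud",
    "heuristic evaluation",
    "cognitive walkthrough",
    "log file recording",
    "video recording",
]

# Hypothetical coding result: each AR paper mapped to the evaluation
# methods it reports. IDs and codings are made up for illustration.
coded_papers = {
    "paper_01": ["questionnaire", "thinking aloud"],
    "paper_02": ["questionnaire", "log file recording"],
    "paper_03": ["heuristic evaluation"],
}


def method_frequencies(papers: Dict[str, List[str]]) -> Counter:
    """Count in how many papers each evaluation method occurs."""
    counts = Counter()
    for methods in papers.values():
        # set() so a method listed twice in one paper is counted once
        counts.update(set(methods))
    return counts


def unused_methods(papers: Dict[str, List[str]], all_methods: List[str]) -> List[str]:
    """Return the methods from the master list that no paper uses."""
    used = method_frequencies(papers)
    # Counter returns 0 for missing keys, so unused methods show up as 0
    return [m for m in all_methods if used[m] == 0]


if __name__ == "__main__":
    for method, n in method_frequencies(coded_papers).most_common():
        print(f"{method}: {n}")
    print("Not used:", unused_methods(coded_papers, ALL_METHODS))
```

Counting per paper (rather than per mention) matches the question we actually want to answer: in how many papers does a method appear.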

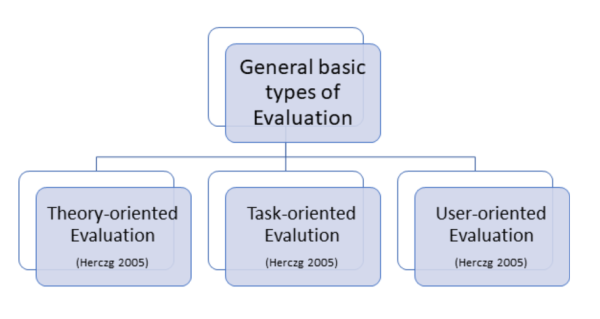

The first graphic gives you an overview of the basic types of evaluation.

In a theory-oriented evaluation, design principles derived from “theories” (norms or style guides) are checked. This is especially suitable for standardized tasks and homogeneous user groups. In a task-oriented evaluation, by contrast, the basis is a set of predefined task descriptions (for example, a GOMS model: goals, operators, methods and selection rules). The goals here are to investigate how natural the system is to use, to estimate the learning effort, and to check the completeness of the system. Finally, the user-oriented evaluation is a very important procedure because it involves the users themselves. Various methods are used, such as surveys, log files, controlled experiments, thinking aloud or verbal reports (Herczeg, 2005).
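To make the task-oriented idea a bit more concrete, here is a small sketch using the Keystroke-Level Model (KLM), the simplest member of the GOMS family, which predicts how long an expert needs to perform a task without errors. This is our own illustration and not taken from Herczeg (2005): the operator times are commonly cited KLM values, while the "save as" task, its operator sequence, and the simplified placement of the mental operator M are assumptions.

```python
# Commonly cited KLM operator times in seconds.
# K assumes an average skilled typist.
OPERATOR_TIMES = {
    "K": 0.20,  # press a key or mouse button
    "P": 1.10,  # point at a target with the mouse
    "H": 0.40,  # move the hand between keyboard and mouse
    "M": 1.35,  # mental preparation
}


def klm_estimate(sequence: str) -> float:
    """Sum the operator times for a sequence such as 'MHPK'."""
    return sum(OPERATOR_TIMES[op] for op in sequence)


# Hypothetical task: save a file under a new 8-letter name.
# M (decide), H (hand to mouse), P + K (open menu), P + K (click 'Save as'),
# H (hand to keyboard), M (recall name), 8 x K (type name), K (Enter).
task = "MHPKPKHM" + "K" * 8 + "K"
print(f"Predicted execution time: {klm_estimate(task):.2f} s")
# prints: Predicted execution time: 7.90 s
```

Such a prediction only covers error-free expert performance; learning effort and naturalness still have to be judged from the task descriptions or with users.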

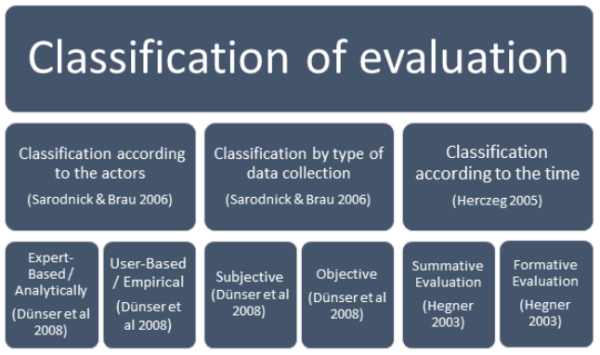

Besides that, we show you guys a classification of evaluation approaches in the second graphic.

To describe this graphic, we begin with the classification according to the actors, starting with expert-based / analytical evaluation. Here the evaluation is carried out by usability experts based on guidelines; typical methods are heuristic evaluation and cognitive walkthrough. A user-based / empirical evaluation, in contrast, collects its data through surveys and observations of actual users; typical procedures are questionnaires and thinking aloud. This part is very important for us, because Dünser et al. (2008) mainly focus on the user-based approach in their survey. It will therefore also be our focus when we classify our research papers in the layer "evaluation methods".

As for the classification by type of data collection, there are subjective and objective evaluations. A subjective evaluation yields so-called “soft” data, e.g. whether using the system is convenient or enjoyable. Typical methods are discussions between users, user surveys and thinking-aloud protocols. An objective evaluation yields quantitative data, such as execution times, learning times or error rates. Typical methods are observation logs, log file recording and video recording (Sarodnick & Brau, 2006).

Lastly, the classification according to time distinguishes between summative and formative evaluation. Summative evaluation is the final, overall assessment of quality at the end of system development, whereas formative evaluation takes place during system development (Herczeg, 2005).
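Since we want to use this classification when coding our AR papers, here is a minimal Python sketch of how the three dimensions could be represented. The class names, the `Evaluation` record, and the example paper are hypothetical. Methods are only assigned to the classes named in the text above (everything else stays `None`), and timing is stored per evaluation rather than per method, because summative vs. formative describes when an evaluation takes place, not which method is used.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional


class Actor(Enum):
    EXPERT = "expert-based / analytical"
    USER = "user-based / empirical"


class DataType(Enum):
    SUBJECTIVE = "subjective (soft data)"
    OBJECTIVE = "objective (quantitative data)"


class Timing(Enum):
    FORMATIVE = "formative (during development)"
    SUMMATIVE = "summative (end of development)"


@dataclass(frozen=True)
class Method:
    name: str
    actor: Optional[Actor] = None          # None: not assigned in the text
    data_type: Optional[DataType] = None   # None: not assigned in the text


# Only the assignments stated in the classification above.
METHODS = {
    m.name: m
    for m in [
        Method("heuristic evaluation", actor=Actor.EXPERT),
        Method("cognitive walkthrough", actor=Actor.EXPERT),
        Method("questionnaire", actor=Actor.USER),
        Method("thinking aloud", actor=Actor.USER, data_type=DataType.SUBJECTIVE),
        Method("discussion between users", data_type=DataType.SUBJECTIVE),
        Method("user survey", data_type=DataType.SUBJECTIVE),
        Method("observation log", data_type=DataType.OBJECTIVE),
        Method("log file recording", data_type=DataType.OBJECTIVE),
        Method("video recording", data_type=DataType.OBJECTIVE),
    ]
}


@dataclass(frozen=True)
class Evaluation:
    """One evaluation reported in a paper: a method applied at a given time."""
    method: Method
    timing: Timing


def user_based(evaluations: List[Evaluation]) -> List[Evaluation]:
    """Keep only evaluations whose method is user-based (our main focus)."""
    return [e for e in evaluations if e.method.actor is Actor.USER]


# Hypothetical coding of one AR paper (made up for illustration).
paper_evals = [
    Evaluation(METHODS["heuristic evaluation"], Timing.FORMATIVE),
    Evaluation(METHODS["questionnaire"], Timing.SUMMATIVE),
]
print([e.method.name for e in user_based(paper_evals)])  # ['questionnaire']
```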

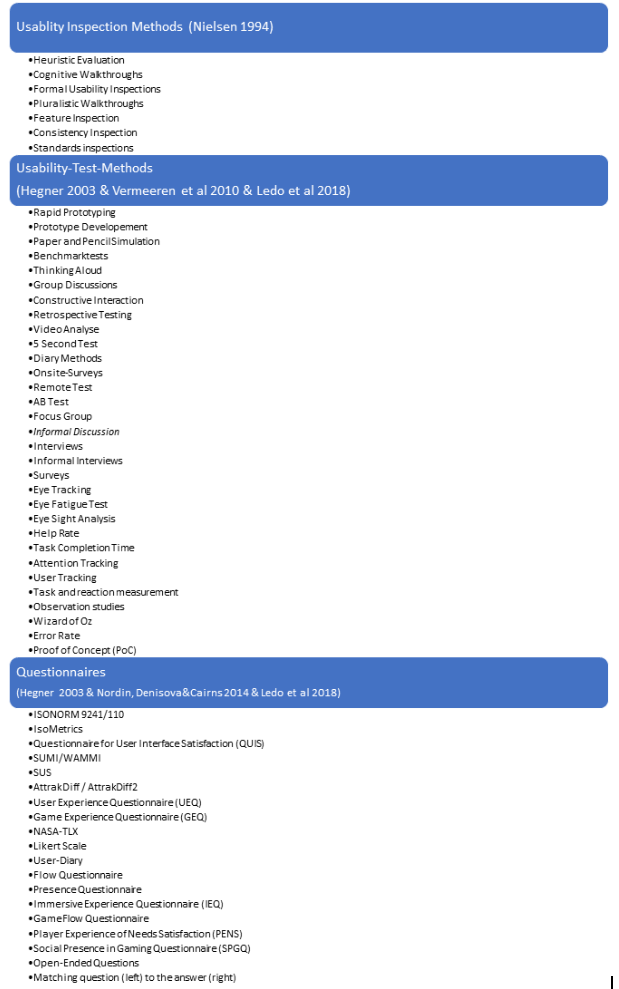

Lastly, the third graphic shows a list of evaluation methods.

And here is the list of UX (user experience) evaluation methods collected on http://www.allaboutux.org/all-methods (retrieved 02.02.2019):

- 2DES
- 3E (Expressing Experiences and Emotions)
- Aesthetics scale
- Affect Grid
- Affective Diary
- Attrak-Work questionnaire
- Audio narrative
- AXE (Anticipated eXperience Evaluation)
- Co-discovery
- Context-aware ESM
- Contextual Laddering
- Controlled observation
- Day Reconstruction Method
- Differential Emotions Scale (DES)
- EMO2
- Emocards
- Emofaces
- Emoscope
- Emotion Cards
- Emotion Sampling Device (ESD)
- Experience clip
- Experience Sampling Method (ESM)
- Experiential Contextual Inquiry
- Exploration test
- Extended usability testing
- Facereader
- Facial EMG
- Feeltrace
- Fun Toolkit
- Geneva Appraisal Questionnaire
- Geneva Emotion Wheel
- Group-based expert walkthrough
- Hedonic Utility scale (HED/UT)
- Human Computer trust
- I.D. Tool
- Immersion
- Intrinsic motivation inventory (IMI)
- iScale
- Kansei Engineering Software
- Living Lab Method
- Long term diary study
- MAX – Method of Assessment of eXperience
- Mental effort
- Mental mapping
- Mindmap
- Multiple Sorting Method
- OPOS – Outdoor Play Observation Scheme
- PAD
- Paired comparison
- Perceived Comfort Assessment
- Perspective-Based Inspection
- Physiological arousal via electrodermal activity
- Playability heuristics
- Positive and Negative Affect Scale (PANAS)
- PrEmo
- Presence questionnaire
- Private camera conversation
- Product Attachment Scale
- Product Experience Tracker
- Product Personality Assignment
- Product Semantic Analysis (PSA)
- Property checklists
- Psychophysiological measurements
- QSA GQM questionnaires
- Reaction checklists
- Repertory Grid Technique (RGT)
- Resonance testing
- Self Assessment Manikin (SAM)
- Semi-structured experience interview
- Sensual Evaluation Instrument
- Sentence Completion
- ServUX questionnaire
- This-or-that
- Timed ESM
- TRUE Tracking Realtime User Experience
- TUMCAT (Testbed for User experience for Mobile Contact-aware ApplicaTions)
- UTAUT (Unified theory of acceptance and use of technology)
- UX Curve
- UX Expert evaluation
- UX laddering
- Valence method
- WAMMI (Website Analysis and Measurement Inventory)
- Workshops + probe interviews

The methods above give a brief overview of evaluation methods in the context of usability and user experience. There are many other evaluation methods, used in different fields such as marketing or economics in general, which are not mentioned here. We focused on those from the fields of human-computer interaction and computer science.

We wish you a wonderful weekend!

References

Åström, K. J., & Eykhoff, P. (1971). System identification—a survey. Automatica, 7(2), 123-162.

Duenser, A., Grasset, R., & Billinghurst, M. (2008). A Survey of Evaluation Techniques Used in Augmented Reality Studies. ACM SIGGRAPH ASIA 2008 …. https://doi.org/10.1145/1508044.1508049

Herczeg, M. (2005). Softwareergonomie. München: Oldenbourg Wissenschaftsverlag.

Hegner, M. (2003). Methoden zur Evaluation von Software. IZ-Arbeitsbericht Nr. 29.

Ledo, D., Houben, S., Vermeulen, J., Marquardt, N., Oehlberg, L., & Greenberg, S. (2018). Evaluation Strategies for HCI Toolkit Research. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems (CHI ’18) (pp. 1–17). Montreal, QC, Canada: ACM Press. https://doi.org/10.1145/3173574.3173610

Nielsen, J. (1994, April). Usability inspection methods. In Conference companion on Human factors in computing systems (pp. 413-414). ACM.

Nordin, A. I., Denisova, A., & Cairns, P. (2014, October). Too many questionnaires: measuring player experience whilst playing digital games. In Seventh York Doctoral Symposium on Computer Science & Electronics (Vol. 69).

Sarodnick, F., & Brau, H. (2006). Methoden der Usability Evaluation: Wissenschaftliche Grundlagen und praktische Anwendung. Bern: Verlag Hans Huber.

Vermeeren, A. P., Law, E. L. C., Roto, V., Obrist, M., Hoonhout, J., & Väänänen-Vainio-Mattila, K. (2010, October). User experience evaluation methods: current state and development needs. In Proceedings of the 6th Nordic Conference on Human-Computer Interaction: Extending Boundaries (pp. 521-530). ACM.

http://www.allaboutux.org/all-methods, retrieved 02.02.2019